Meta’s impact on fraud and scams

Cyjax’s open-source intelligence team recently investigated Meta’s impact on fraud and scams with regard to advertisements. The investigation consisted of extensive research and data collection from dark web sources, closed and open chats, social media platforms, marketplaces, and threat actor marketing forums.

Do threat actors discuss tactics they use to get fraudulent ads approved by Meta?

Scammers often find ways to exploit loopholes in social media platforms’ automated filters and moderation systems. This allows them to bypass platform policies and post fraudulent advertisements which can deceive unsuspecting users. Specific methods or chats containing discussions regarding ads or scams approved by Meta were not found at this time, but they are likely to be found in threat actor groups limited to those operating a scam. Threat actors can be observed advertising bypass methods for sale; however, no publicly shared copies have been located so far. Meta reported in 2023 that it had slightly more than three billion monthly active users worldwide, with estimates that four to five percent of those accounts are fake. This means there may be as many as 150 million fake accounts in circulation. More recent reports claim that fake Facebook Marketplace ads make up more than 34% of all advertisements. Platforms such as Facebook are unlikely to issue refunds to users who have purchased goods from a fake advert. Should the fake advert be taken down, it simply stops generating revenue. Additionally, the threat actor behind the fake advert is often hard to locate in person.
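The fake-account estimate above is simple arithmetic, and a short sketch makes the implied range explicit. The figures are the ones quoted in the text; rounding the user count down to exactly three billion is an assumption made for illustration.

```python
# Rough range of fake accounts implied by the figures quoted above.
# Assumption: "slightly more than three billion" is rounded down to 3 billion.
monthly_users = 3_000_000_000
fake_percent_low, fake_percent_high = 4, 5  # "four to five percent"

# Integer arithmetic avoids floating-point rounding noise.
fake_low = monthly_users * fake_percent_low // 100
fake_high = monthly_users * fake_percent_high // 100

print(f"{fake_low:,} to {fake_high:,} fake accounts")
```

On these assumptions the estimate spans 120 million to 150 million accounts, which is where the "as many as 150 million" upper bound comes from.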

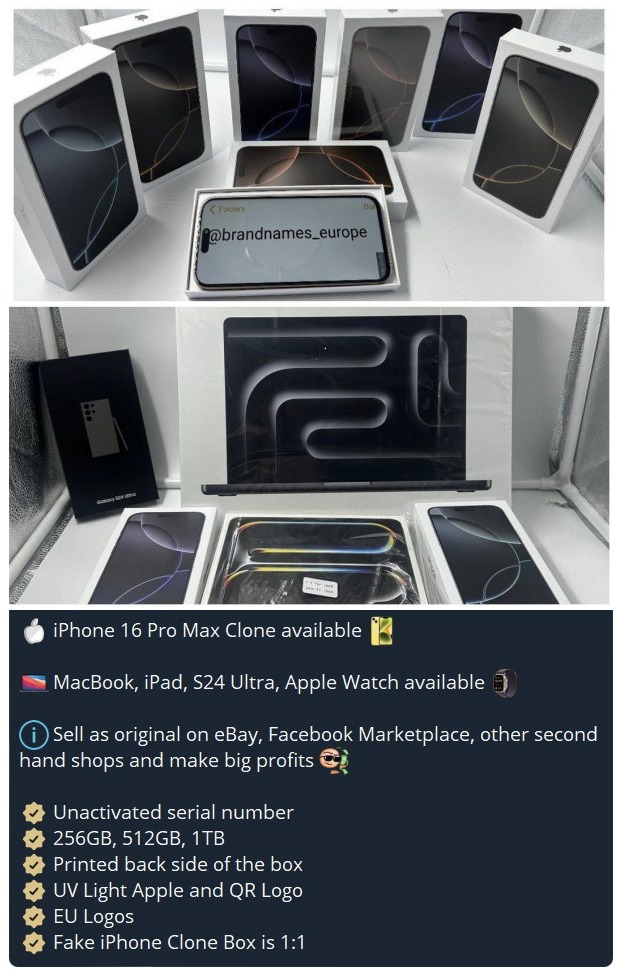

Cyjax noted that some threat actors on platforms such as Telegram offer counterfeit or cloned products to be sold via fake ads claiming they are legitimate or original. For example, fake Apple items such as iPhones are advertised for sale to a threat actor running ad campaigns. The threat actor running the ad campaigns purchases the fake or cloned goods for a low price, with the intention to sell them for the full price of the legitimate item to unsuspecting customers.

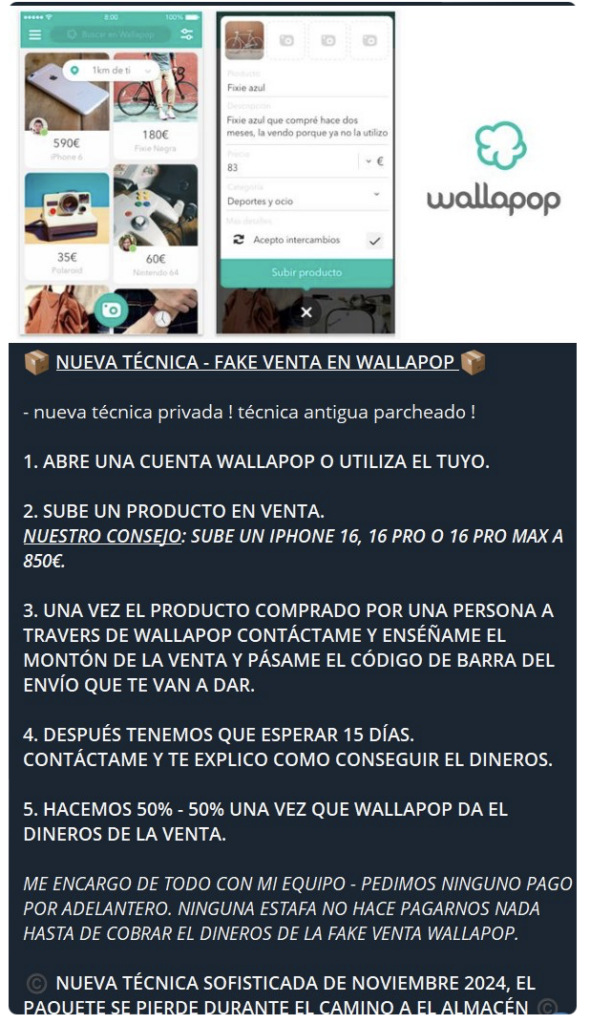

Whilst exact details were not shared, threat actors in Spanish chats can be seen advertising services which assist in the sale of fake products via ads. Although these fake ads are for purchases to be made on the Wallapop app, the same techniques may be used to conduct similar operations on other adverts or marketplaces, such as Facebook and Instagram. The technique is transferable because it provides what appears to be a legitimate ‘get out’ clause. It appears to involve using the shipping barcode to claim the purchased item was “lost on the way to the warehouse”. These threat actors allege that they will deal with Wallapop. However, no in-depth details were shared with regard to what action was taken with the shipping barcodes or what was sent to Wallapop. The end result sees the threat actor advertising the fake items and being paid for the fake product, usually with an even split of the payment sent to the scammer who sold the services. No product is ever sent out and the customer is told it was lost in transit, forcing Wallapop to take the blame and issue a refund. Because customers believe the fault lies with delivery or transport rather than with the brand or account the purchase was made from, the fraudulent adverts tend to stay up for longer without being shut down following mass complaints or reporting.

The above post translates from Spanish to:

“NEW TECHNIQUE – FAKE SALE ON WALLAPOP

– new private technique! old technique patched !

1. OPEN A WALLAPOP ACCOUNT OR USE YOURS.

2. UPLOAD A PRODUCT FOR SALE.

OUR ADVICE: UPGRADE AN IPHONE 16, 16 PRO OR 16 PRO MAX TO €850.

3. ONCE THE PRODUCT HAS BEEN PURCHASED BY A PERSON THROUGH WALLAPOP, CONTACT ME AND SHOW ME THE AMOUNT OF THE SALE AND GIVE ME THE SHIPPING BARCODE THAT THEY ARE GOING TO GIVE YOU.

4. THEN WE HAVE TO WAIT 15 DAYS.

CONTACT ME AND I WILL EXPLAIN TO YOU HOW TO GET THE MONEY.

5. WE MAKE 50% – 50% ONCE WALLAPOP GIVES THE MONEY FROM THE SALE.

I TAKE CARE OF EVERYTHING WITH MY TEAM – WE ASK FOR NO ADVANCE PAYMENT. NO SCAM DOES NOT MAKE US PAY ANYTHING UNTIL COLLECTING THE MONEY FROM THE FAKE WALLAPOP SALE.

NEW SOPHISTICATED TECHNIQUE FROM NOVEMBER 2024, THE PACKAGE IS LOST ON THE WAY TO THE WAREHOUSE”

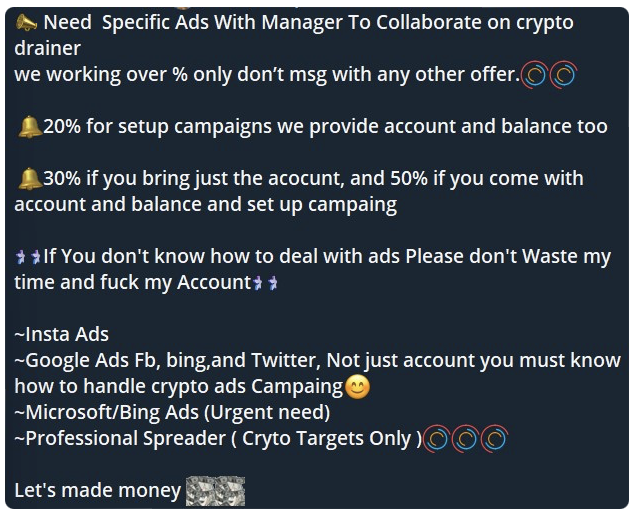

A threat actor was observed posting requests to recruit collaborators for a cryptocurrency drainer scam which relies on specific ads. This scammer offers various percentages of the proceeds depending on the level of work or information the recruited threat actor can provide. This scam is intended to be used alongside ads for Instagram, Facebook, X, and Google, as per the screenshot below. Cyjax will continue to monitor these requests and any further information to build a more in-depth analysis of how this scam is operated.

Are there specific tools or techniques that threat actors use to bypass Meta’s check for ad approval?

As mentioned above, analysts investigated dark web sources, closed and open chats, social media platforms, and marketplaces, including blackhat and unethical or threat actor marketing forums. The investigation looked for any references to tools, tactics, or techniques which may be used to bypass Meta’s checks in order to have a counterfeit or fake advert approved. At the time of writing, none were found; however, specific methods or chats containing such discussions are likely to be found in threat actor fraud groups limited to those operating the scam. Entry to these areas often requires an invitation from the admin, extended only to those known to the threat group operating the scam.

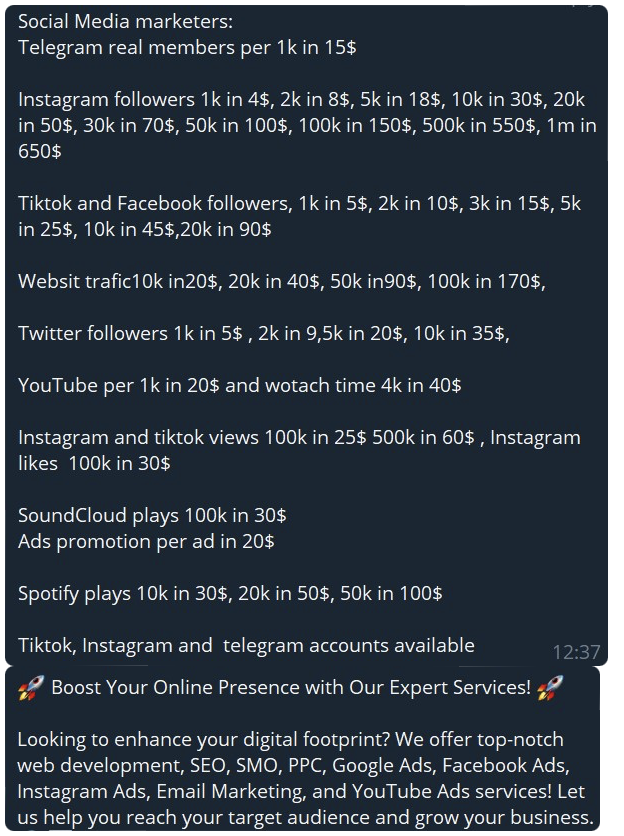

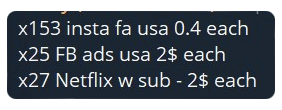

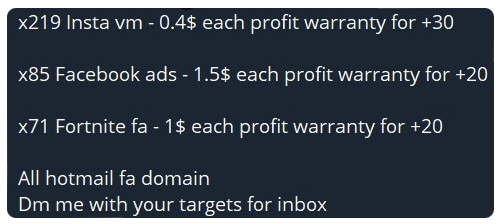

Fraudsters providing services aimed at assisting other threat actors to facilitate fraud via social media ads and marketing were observed. These services may assist with followers, account traffic, email marketing, video watch times, post or video views, social media ads, and promotions. All of this aids in making a counterfeit campaign or account appear more legitimate. The option to purchase an account that has already been made can also be observed.

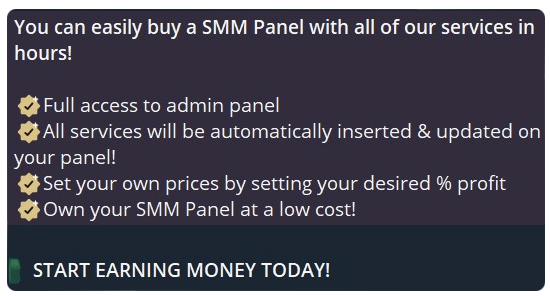

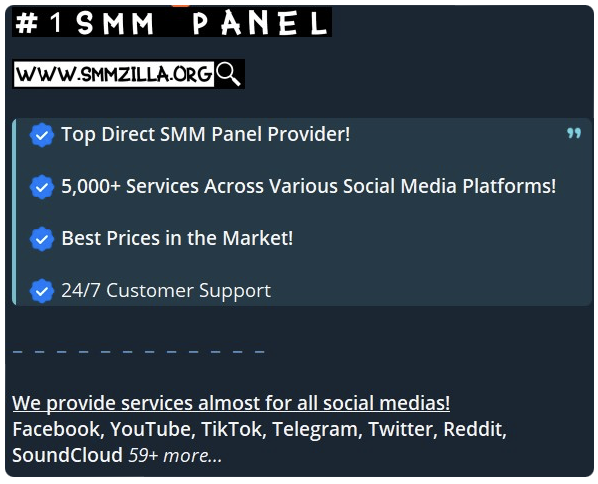

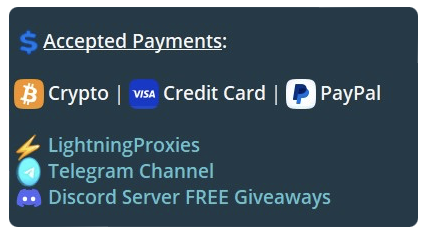

Threat actors have also been observed advertising Social Media Marketing (SMM) panel services to assist scammers wanting to run Meta ad campaigns. An SMM panel is a web-based platform designed to facilitate the management and optimisation of social media marketing campaigns, with the aim of building a company’s brand, increasing sales, and driving website traffic. These platforms cater to businesses, influencers, and individuals seeking to enhance their social media presence, engage with their audience, and expand their reach. Threat actors may use SMM panels combined with bot accounts to boost their chances of running a successful fraudulent or counterfeit campaign. Should an account be found to be in violation of a given platform’s policies when using an SMM panel, it can result in the suspension or removal of the social media profiles. Some threat actors advertising SMM services also offer 24/7 customer support as a way to gain further customers. Payments for SMM panels are most often requested via cryptocurrency, PayPal, and credit card.

What are the main payment methods used by threat actors?

From observations made so far, it would appear that cryptocurrency, PayPal, prepaid cards, credit cards, Apple Pay, Google Pay, and banking apps which allow international payments, such as Wise, are among the methods most favoured by threat actors seeking payment for counterfeit, fake, or scam ads. These payment options are also used to pay for said ads. Other threat actors do not mention payment methods, instead listing the cost per advertisement, usually in US dollars.

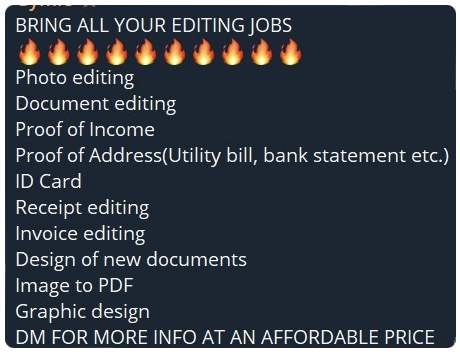

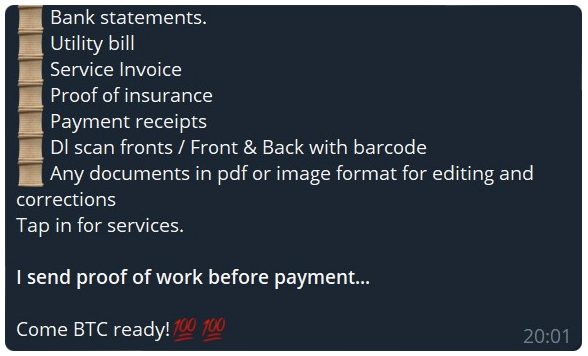

Some threat actors can be seen seeking copies of PDF files or invoices for certain brands relevant to their campaign. These are used when billing customers as a further way of making the account look as legitimate as possible. Other fraud-focused threat actors offer services which assist in creating counterfeit invoices, photo editing, graphic design, and more, to create legitimate-looking campaigns. This includes fake ads for Meta which are claimed to have a higher success rate.

What strategies do threat actors use to make scam ads appear more legitimate?

Threat actors typically seek to re-purpose official content to support their own campaigns. By doing this, the time it takes to commence a campaign is significantly reduced. When official content is used, such as content from the UGG page, users seeking the product could easily be fooled by the official branding, badges, banners, certification, and other elements that have been copied over to the fake advert. Threat actors will also use the same hashtags, text style, and messaging used in ads from official sources. This is an attempt to establish some form of credibility, with as few noticeable differences as possible. In terms of usernames, the scam operators will seek to use the official brand’s name in some way, alongside terms such as Official, UK, US, or English. Examples include @BANKUK, @BANKEng, and @BANKOfficial. It is also common to see accounts using foreign brand locations in areas known to be cheaper, enticing unsuspecting customers into thinking they have found a warehouse, direct source, or cheaper alternative for the legitimate product. Examples of this would be @MichaelKorsCH (Michael Kors China), @MichaelKorsPL (Michael Kors Philippines), and @MichaelKorsMX (Michael Kors Mexico).
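The username pattern described above, a brand name followed by an "official" or regional suffix, lends itself to a simple screening check. The following is a minimal sketch only: the brand list, the suffix list, and the `flag_lookalike` function are hypothetical names chosen for illustration, not a description of any tooling Cyjax or Meta uses.

```python
import re

# Hypothetical watch lists, for illustration only.
BRANDS = ["michaelkors", "ugg"]
SUFFIXES = ["official", "uk", "us", "eng", "ch", "pl", "mx"]


def flag_lookalike(handle: str, official_handles: set[str]) -> bool:
    """Return True if a handle is a brand name plus a known suffix
    and is not one of the brand's own (normalised) handles."""
    # Lowercase and strip "@", underscores, dots, and other punctuation.
    normalised = re.sub(r"[^a-z0-9]", "", handle.lower())
    if normalised in official_handles:
        return False
    for brand in BRANDS:
        if normalised.startswith(brand):
            remainder = normalised[len(brand):]
            if remainder in SUFFIXES:
                return True
    return False


official = {"michaelkors", "ugg"}
for candidate in ["@MichaelKors", "@MichaelKorsMX", "@UGGOfficial"]:
    print(candidate, flag_lookalike(candidate, official))
```

A check like this would only surface candidates for manual review; legitimate regional accounts follow the same naming pattern, so the list of known official handles does most of the work.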

Another similar technique observed on Facebook is an advert posted by what appears to be a normal and genuine user account. This account will share details of a discounted product, with the discount allegedly being obtained through a family member or friend who works at the store where the goods are sold. This user will then go on to share a link to the website where this discount can be used to buy the product. However, the website provided is usually counterfeit and is used to steal money and potentially the details of any unsuspecting victims. Comments seen on these types of scam adverts usually come from bot accounts or users in collusion with the scam. They are made to appear as real Facebook users with genuine purchases. These comments are all of a similar nature and boast about the company or product being great, sometimes posting a picture of the allegedly received goods to mimic a product review. This increases the chance of the advert appearing legitimate.

Historically, from the monitoring Cyjax has done for brands within the retail space, we have seen examples of threat actors creating several fake accounts to promote, like, and interact with advertisements, posts, and videos. On Facebook in particular, these users also share fraudulent reviews claiming they received the product on time and as described.

In one instance Cyjax investigated, a threat actor created an account impersonating a large UK retailer and offered discounted PlayStations through a fake outlet on Facebook. The threat actor used over 30 bot accounts to generate fake reviews. Upon investigation, Cyjax found that these accounts were all linked to a generic, newly created user whose likes mirrored those of the bot accounts. Fake accounts or ads which use bot reviews to boost engagement and appear more legitimate can often be spotted by comments that all have similar wording. For example, “love this product so good”, “product is so good, love it”, “love it”, and “so good”. It is not uncommon to see mass comments pasted by bot accounts which contain a few words of no relevance to the post. However, if users do not click on the comments to gauge the authenticity of the accounts posting them, they may only see the advert as having “9k likes, 10k comments”, leading them to believe it is a genuine advert with legitimate interactions. When a user is mesmerised by the product marketing or gimmicks and potentially low advertised prices, this can be enough to make them click the advert. A trending product, such as the Stanley Cup, is likely to attract more scams of this nature. Younger generations may not want to miss out on a current trend, leaving them vulnerable to adverts or accounts masquerading as the real brand.
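The observation that bot comments share near-identical wording can be turned into a rough measurable signal. The sketch below is an illustration under stated assumptions rather than a method taken from the investigation: it scores each pair of comments by word overlap (Jaccard similarity) and reports the share of pairs above a threshold. The function names and the 0.3 threshold are hypothetical choices.

```python
import re
from itertools import combinations


def tokens(comment: str) -> set[str]:
    """Lowercase a comment and split it into a set of words."""
    return set(re.findall(r"[a-z0-9']+", comment.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap between two comments: shared words / all words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_pair_share(comments: list[str], threshold: float = 0.3) -> float:
    """Share of comment pairs whose word overlap meets the threshold."""
    pairs = list(combinations([tokens(c) for c in comments], 2))
    if not pairs:
        return 0.0
    similar = sum(1 for a, b in pairs if jaccard(a, b) >= threshold)
    return similar / len(pairs)


# The example comments quoted above, versus some varied comments.
bot_like = ["love this product so good", "product is so good, love it",
            "love it", "so good"]
varied = ["Does this ship to Ireland?", "Arrived late and the box was damaged",
          "What sizes do you have?"]

print(similar_pair_share(bot_like))
print(similar_pair_share(varied))
```

A high share of overlapping pairs is only a hint, since genuine short reviews also repeat common words; it would be more useful when combined with account age and the linked-account patterns described above.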

Another recent trend among threat actors is the adoption of AI to create fake celebrity pages, with the influential figure appearing to use, endorse, or sell the product. AI tools may be used to superimpose a celebrity’s face onto a made-up advert or video, which is then shared online across multiple social media platforms. These celebrities often hold an influencer-style position for certain generations, with fake accounts used to trick masses of people into believing a product is good, sought after, or legitimate. One example is the use of deepfakes imitating Joe Rogan to push products such as supplements aimed at men and gym goers, including male libido-boosting supplements. Others may be targeted via counterfeit makeup ads featuring, for example, a member of the Kardashian family. These beauty products often have deals that are too good to be true, with legitimate makeup brands being far more expensive.

How do threat actors increase levels of engagement?

Threat actors have historically been willing to target any consumer product they consider could drive traffic toward their online pages. Within threat actor chat rooms, Cyjax has seen more established threat actors urging their “youngers” to be more opportunistic in what they target. Whilst targeting items such as PS4s, designer goods, and watches may generate more income, the promotion and sale of non-existent Christmas stockings gives threat actors a safer income stream. Threat actors believe possible victims are less hesitant to buy a product under a £50 price tag and put less time into researching it. In contrast, higher-ticket items draw more suspicion and thought from customers before they make a purchase.

With that said, particularly amongst new threat actors, fake vendors of games, consoles, and designer goods tend to increase at this time of the year, especially in the US. Over the past week, Cyjax has seen a rise in the number of fake accounts promoting the sale of refurbished ‘like new’ consoles, which are targeted at gamers, teens, and single mothers. They have also been promoted in money-saving and financial advice chats or accounts. These adverts are typically backed up with fake posts from the threat actor’s bot network to boost engagement and activity. As these posts generate interest, unwitting users engage with the fake vendors and believe they are legitimate. In many of the cases Cyjax has found, especially in the US, threat actors use their personal bot networks to drive interest in the advert. This then drives natural engagement from victims. Some of these bots will reply to legitimate user comments to create fake conversations, once again making the advert look real.

From what Cyjax has seen, when a would-be victim does directly message the threat actor, the threat actor is very responsive and aggressive. Here, threat actors use the tactic of discounts running out to close the fake sale. Some accounts attempt to be friendly and personal in direct messages to appear as humane and kind as possible, portraying themselves as an unassuming, helpful, and eager-to-please business owner. Extra discounts may also be offered, such as a further 10% off, if users simply enter their details in the payment section of the link provided.

Alongside threat actors who sell non-existent goods, Cyjax found that posts selling counterfeit products gain significant organic traction. Fake football tops, designer goods, and watches generate significant interest from users online, particularly as the Christmas season approaches.

Facebook also hosts start-up-style business pages from legitimate vendors which sell products such as board games or puzzles. These are often quirky, interactive, and “fun for the family”, with low price tags. They are sometimes marketed as “great for friends or social gatherings” or as “cute” gift ideas. These products and their marketing are often quickly copied by threat actors and advertised as their own. The funds are taken from many small purchases for a product that will never arrive. This is sometimes the goal for threat actors, but they may also want to steal victim credentials via fake payment links.
